Connecting GPUs directly to SSDs to accelerate AI processing

Mastering artificial intelligence technology is still a tough challenge. Recently, however, IBM, Nvidia and other researchers proposed a “crazy” solution to help people working on machine learning breathe a little easier: a direct connection between the GPU and SSDs.

In a newly published study, the idea is called Big accelerator Memory (BaM) and involves connecting a GPU directly to a large number of SSDs. The aim is to relieve bottlenecks in machine learning training and other data-intensive tasks.

In their study, the scientists said: “BaM minimizes I/O bandwidth amplification by allowing GPU threads to read or write small amounts of data on demand, as determined by the computation.”
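
To make the idea of I/O amplification concrete, here is a small Python sketch. It is only an illustration under stated assumptions: the function name `io_amplification`, the item size and the transfer granularities are all hypothetical, and it models the worst case in which every access lands in a different block, so nothing fetched is reused.

```python
import math


def io_amplification(num_accesses, bytes_needed, transfer_granularity):
    """Ratio of bytes moved from storage to bytes the computation actually uses.

    Assumption: each access touches a distinct region, so every access
    pays for whole transfer units and none of the extra bytes are reused.
    """
    # Storage is read in whole units, so round each access up to full units.
    units_per_access = math.ceil(bytes_needed / transfer_granularity)
    moved = num_accesses * units_per_access * transfer_granularity
    useful = num_accesses * bytes_needed
    return moved / useful


# Hypothetical workload: 1000 random lookups of 64-byte items.
coarse = io_amplification(1000, 64, 4096)  # whole 4 KiB pages per lookup
fine = io_amplification(1000, 64, 512)     # smaller 512-byte blocks per lookup
```

With these made-up numbers, fetching 4 KiB per 64-byte lookup moves 64 times more data than is needed, while 512-byte requests move 8 times more. Smaller on-demand requests shrink the wasted bandwidth, which is the effect the quote describes.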

Processing acceleration and stability

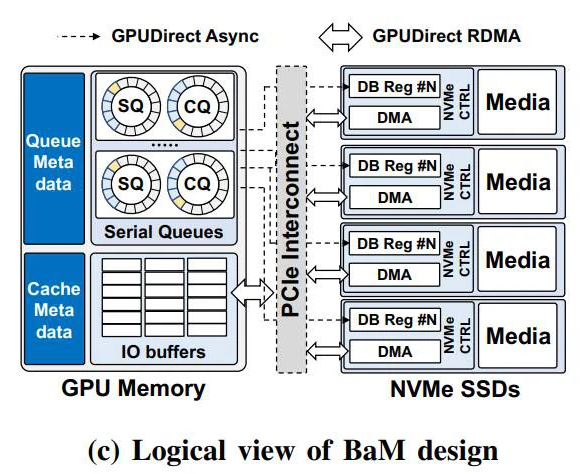

“The purpose of BaM is to expand GPU memory capacity and increase storage access bandwidth while providing high-level abstractions for GPU threads to easily make on-demand, fine-grained accesses to huge data structures in the memory hierarchy.”

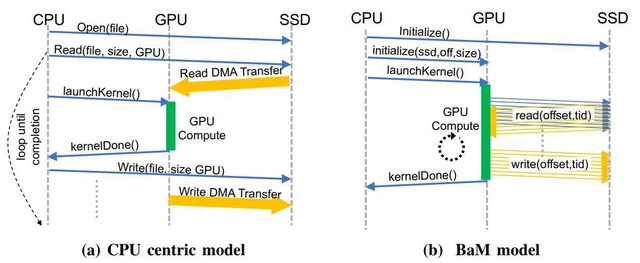

Comparison between CPU-centric design and BaM’s new design

The ultimate goal is to reduce the reliance of Nvidia GPUs and similar hardware accelerators on general-purpose CPUs. Normally, the CPU is at the center of every processing task: it opens files, reads them from the SSD, sends the data to the GPU, starts the GPU computation, receives the results from the GPU and then writes them back to the SSD.
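
The CPU-centric sequence described above can be sketched in plain Python. This is a simulation under stated assumptions, not real GPU code: `fake_gpu_kernel` and `cpu_centric_pipeline` are hypothetical names, the "GPU memory" is just a `bytearray`, and the kernel is an ordinary Python function. The point is only to show that every step is driven by the CPU.

```python
def fake_gpu_kernel(device_buffer):
    """Stand-in for a GPU kernel (assumption): adds 1 to every byte, mod 256."""
    return bytearray((b + 1) % 256 for b in device_buffer)


def cpu_centric_pipeline(in_path, out_path):
    """Run the CPU-driven flow and return a log of which side drove each step."""
    steps = []

    with open(in_path, "rb") as f:            # 1. CPU opens the file
        steps.append("cpu: open file")
        host_buffer = f.read()                # 2. CPU reads it from the SSD
        steps.append("cpu: read from ssd")

    device_buffer = bytearray(host_buffer)    # 3. CPU copies data to the GPU (simulated)
    steps.append("cpu: copy to gpu")

    steps.append("cpu: launch kernel")        # 4. CPU starts the GPU computation
    device_result = fake_gpu_kernel(device_buffer)

    host_result = bytes(device_result)        # 5. results return to the CPU (simulated)
    steps.append("cpu: copy result back")

    with open(out_path, "wb") as f:           # 6. CPU writes the results to the SSD
        f.write(host_result)
    steps.append("cpu: write to ssd")

    return steps
```

All six logged steps begin with `cpu:`, which is the dependency on the CPU that the researchers want to remove.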

With BaM, the GPU becomes the center: it retrieves the data directly and processes it instead of depending on the CPU. Thanks to greater bandwidth and faster data access, along with more processing threads, the GPU’s effective computing power improves significantly compared to the old design. By allowing Nvidia GPUs to connect directly to storage and then process the data, the work is done by the most specialized tools available today.

But how can these GPUs access data without support from the CPU? BaM uses a software-managed cache in GPU memory together with a software library through which GPU threads can request data directly from the NVMe SSDs. The movement of data between the two is handled by the GPU itself.
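
A toy Python model can show what a software-managed cache in front of slower storage does. It is a minimal sketch under stated assumptions, not BaM's implementation: the class name `SoftwareCache`, the block size and the LRU eviction policy are all chosen here for brevity (the article does not say which policy BaM uses), and a `bytes` object stands in for the NVMe SSD.

```python
from collections import OrderedDict


class SoftwareCache:
    """Toy LRU cache of fixed-size blocks in front of a slower backing store."""

    def __init__(self, backing_store, block_size, capacity_blocks):
        if capacity_blocks < 1:
            raise ValueError("capacity_blocks must be at least 1")
        self.store = backing_store       # stands in for the NVMe SSD (assumption)
        self.block_size = block_size
        self.capacity = capacity_blocks
        self.blocks = OrderedDict()      # block index -> bytes, oldest first
        self.hits = 0
        self.misses = 0

    def _load_block(self, idx):
        # On a miss, fetch exactly one block from the backing store.
        start = idx * self.block_size
        return self.store[start:start + self.block_size]

    def read(self, offset, length):
        """Return `length` bytes starting at `offset`, going through the cache."""
        out = bytearray()
        end = offset + length
        while offset < end:
            idx = offset // self.block_size
            if idx in self.blocks:
                self.hits += 1
                self.blocks.move_to_end(idx)          # mark as most recently used
            else:
                self.misses += 1
                if len(self.blocks) >= self.capacity:
                    self.blocks.popitem(last=False)   # evict least recently used
                self.blocks[idx] = self._load_block(idx)
            within = offset % self.block_size
            take = min(end - offset, self.block_size - within)
            out += self.blocks[idx][within:within + take]
            offset += take
        return bytes(out)
```

In this model, a reader asks for a small byte range, and only the blocks covering that range are fetched on a miss; repeated accesses to the same block are served from the cache. In BaM the equivalent logic runs on the GPU, so the CPU is not involved in each request.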

Ultimately, this solution should let machine learning and other data-intensive tasks access data faster and, more importantly, in specific ways that help with high-volume workloads. In the researchers’ tests, the GPUs and SSDs worked well together, and data transfer and processing speeds were significantly faster than before.

The team is now planning to open-source its hardware and software design to benefit the wider machine learning community around the world.

Source: TechRadar (via genk.vn)